Enablement Impact is a Sliding Scale

Introduction

As usual, I want to talk about making an impact with enablement. But this week I want to dig into a specific aspect, which is that “impact” means different things to different people. In reality, it is a sliding scale.

If you’re not aware that I believe you can make a significant impact with enablement (and have proven it), you are either not reading my posts or aren’t paying attention. I’m harping on the fact, incessantly, and purposefully.

At the same time, I want to recognize the sliding scale of impact, and the importance of aligning with executive expectations and applying the right efforts and measurement strategy to the right initiatives.

What does the sliding scale look like? Glad you asked.

I should add that this scale is not written in stone or absolute. This is an example — a representation. You can probably come up with your own or feel free to edit. It’s not rocket science — the impacts start low on the left and increase as you move to the right.

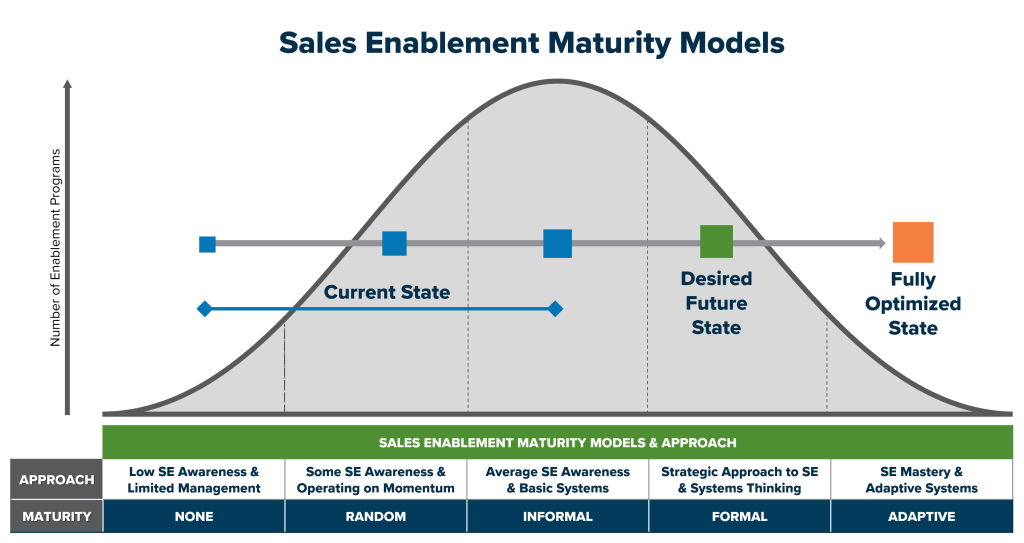

You might even think of it as being aligned with the sales enablement maturity model.

The difference, in my mind, is that the goal with the maturity model is to get to the Formal stage as quickly as possible and then evolve to the Adaptive stage (performance consulting) as soon as possible. With the Sliding Scale of Enablement Impact, I believe you should determine the impact and approach, project by project, and then behave accordingly.

If you try to do everything at the highest possible level of impact, you’ll likely crash and burn. A sales methodology implementation is an all-out, long-term change management project with significant impact potential. A quarterly product update is important, of course, especially the sales readiness for prospect and customer conversations, but it’s not the same level of effort or impact.

- For the sales methodology implementation, you’ll want to focus on long-term adoption and likely show the impact on things like overall sales productivity (revenue per rep), sales velocity and its four metrics (number of qualified opportunities worked, average sales cycle, average deal size, win rates), or other metrics that matter to your leaders, and very possibly the ROI for the project for the first full year. This would be the far-right side of the Sliding Scale of Enablement Impact.

- For the quarterly product update, you might get to impact by showing cross-sell, upsell, or sales lift when the new features or solutions are sold, but if the updates are simple enough, you might be measuring for certification or training completion, or conversation intelligence keywords (new product discovery questions and appropriate presentations).
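To make the sales velocity reference concrete, here is a minimal sketch using the commonly cited formula (qualified opportunities times average deal size times win rate, divided by average sales cycle length). The function name and all of the numbers are hypothetical and for illustration only; they do not come from a real project.

```python
def sales_velocity(qualified_opps: int, avg_deal_size: float,
                   win_rate: float, avg_cycle_days: float) -> float:
    """Revenue generated per day, using the commonly cited velocity formula."""
    if avg_cycle_days <= 0:
        raise ValueError("avg_cycle_days must be positive")
    return qualified_opps * avg_deal_size * win_rate / avg_cycle_days


# Hypothetical pre- and post-initiative numbers (assumptions, not real data).
before = sales_velocity(qualified_opps=100, avg_deal_size=50_000,
                        win_rate=0.20, avg_cycle_days=90)
after = sales_velocity(qualified_opps=100, avg_deal_size=50_000,
                       win_rate=0.25, avg_cycle_days=75)

lift_pct = (after / before - 1) * 100
print(f"Velocity before: ${before:,.0f}/day")
print(f"Velocity after:  ${after:,.0f}/day")
print(f"Lift: {lift_pct:.0f}%")  # roughly a 50% lift with these inputs
```

Notice that two modest changes (a better win rate and a shorter cycle) compound, which is one reason velocity is a useful metric for a long-term initiative like a methodology implementation.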

So, technically, you could be operating at the Formal maturity level, generally, and still be approached about an initiative or project after your strategic enablement plan is in play. At that time, you’d need to determine where you should be on the sliding scale of enablement impact for that initiative (if you do it, which is always another possible discussion).

Gauging Your Enablement Initiatives

There are several things at play in how to approach an enablement initiative, relative to this sliding scale:

- What is the executive (or your manager’s) expectation? Where does it fall on the sliding scale?

- How critical is the initiative to the success of the sales force?

- How big is the effort?

- How difficult will the change management be?

- How does the initiative fit with the current strategic enablement plan that’s in place? (You do have one in place, of course, right?)

- Can it be done to the expectation (impact level) without changing or jeopardizing your current plan?

- Or, how does the plan need to be altered to accommodate the new initiative?

A Tale of Two Leaders

I once dotted-lined to two sales leaders. One was responsible for all sales results, domestic and international (VP, Sales); the other led the US field sales team (VP, Field Sales, US). The field leader reported to the other, so don’t ask me why I reported to both, but I did.

The VP, Sales was very strategic and focused. The US field leader was an energetic, “idea-a-minute” guy. Both were very successful and respected in the company, but they worked very differently. My office was right next to the field VP’s office, and he was in and out of my office 5-6 times a day, brimming with ideas and things he wanted to try. He funneled me magazine articles weekly, sometimes photocopied, sometimes ripped out of the magazine. Meanwhile, the VP, Sales was holding me accountable for executing my submitted and approved plan (which both he and the field VP approved).

You see the clash, right?

I started to carry a copy of my folded-up plan in the back pocket of my suit pants. It was like my second wallet. When the field VP (we’ll call him Ted; not his real name) popped in or caught me in a hallway or invited me to his office, I always had my plan with me. When he asked me to do something outside the plan, I’d pull out my plan document, lay it on the desk, and ask how he saw it fitting in, or what he’d like to discuss displacing or pushing. I was calm, polite, respectful, curious, and businesslike. I asked a lot of questions. Ted actually respected the approach. Eventually, we aligned on:

- Not doing the project

- Not doing the project right now, but fitting it into the plan somewhere

- Doing the project, in a minimal way, or as a test

- Displacing something in the current plan (required a caucus and consensus with the VP, Sales)

- Funneling the project to someone else

Then, when we moved forward, we aligned on the objectives, expected outcomes, and measurement plan, if any – all the stuff in the Sliding Scale of Enablement Impact.

And, just so it’s said, for clarity and fairness, I learned a ton from working with Ted (and the VP, Sales), had a great working relationship with both, and actually followed Ted to another company.

So, What Does “Impact” Mean?

Let’s get one level more specific. On the sliding scale the words I used are:

- Accomplished a Requested Activity

- Achieved a Stated Objective

- Moved the Needle on an Important Metric

- Revenue or Profitability Impact Shown

- Significant Financial ROI Attributed

Let’s look at an example of each. Except for the first one, you’ll find all of these impacts on the above “What’s Possible with Enablement” graphic.

- Accomplished a Requested Activity: Supported a new product launch with 100% training completion prior to launch date.

- Achieved a Stated Objective: Decreased new hire ramp-up time by 52%.

- Moved the Needle on an Important Metric: Increased sales per rep by 47% year-over-year (YoY).

- Revenue or Profitability Impact Shown: Increased sales per rep 90 days post-training by 23% – an accretive increase of $36.6MM/year.

- Significant Financial ROI Attributed: $398MM YoY revenue increase, $9.96MM net profit increase, for a 3,884% ROI.
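For readers who want to see how an ROI figure like the last one is calculated, here is a minimal sketch using the standard Phillips-style formula: net benefit (benefit minus cost) divided by cost, times 100. The project cost is not stated in this post. The $250,000 used below is purely an assumption, chosen because it happens to reproduce the quoted percentage when the $9.96MM net profit increase is treated as the benefit.

```python
def roi_percent(benefit: float, cost: float) -> float:
    """Phillips-style ROI: net benefit (benefit - cost) over cost, as a percentage."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost * 100


net_profit_increase = 9_960_000   # from the example above
assumed_project_cost = 250_000    # ASSUMPTION: not stated in the post

print(round(roi_percent(net_profit_increase, assumed_project_cost)))  # 3884
```

Whatever inputs you use, agree with your stakeholders on what counts as a benefit and what counts as a cost before you run the numbers.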

Are all of those “impacts?” While I personally prefer the ones further down the list, each of these was considered a positive accomplishment by the senior-most sales leader I reported to at the time, or by the sales leader who hired me as a consultant. It’s what they wanted, and I delivered.

Now, did “I” do it? No, of course not. In enablement, or as a consultant, you have influence without authority. But you (maybe with the team you led and empowered) spearheaded the initiative – you rallied those who did the work or held others accountable – you created the systems, the processes, and the workflows that helped people get better results.

You got things done through others – the classic definition of leadership.

Surprisingly, some sales leaders, in some companies, sincerely did not care whether I fully evaluated the impact using Kirkpatrick- or Phillips-like measures (reaction, learning, application, results, ROI). A few others expected a minimum of results measurement and reporting, and in several cases in my career, a full ROI analysis was demanded.

Some Advice About Safety Nets

Here’s a piece of advice, for what it’s worth. Remember the advice that was often given to classroom instructors to dress one level up from your attendees? (You may or may not be old enough to have heard that old adage.) My advice for measurement and impact reporting is similar.

If execs just cared to know behaviors changed and reps or managers were applying what was taught, I went one step further to measure pre-/post-initiative results. If they just wanted to know that learning occurred, I worked to judge application and adoption. If they wanted to see results improve, I measured and reported for that, but calculated ROI, as well. I didn’t always report on what execs didn’t ask about, or ask for, but I had it – if for no other reason, for my own edification and as a safety net for me and my team.
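If it helps to picture the “one level up” rule, here is a minimal sketch. It assumes the five Kirkpatrick- and Phillips-like measures mentioned earlier form an ordered ladder; the names `LEVELS` and `level_to_measure` are hypothetical and just for illustration.

```python
# Ordered ladder of evaluation levels, lowest to highest.
LEVELS = ["reaction", "learning", "application", "results", "roi"]


def level_to_measure(expected: str) -> str:
    """Return the level one step above what the exec expects (capped at ROI)."""
    index = LEVELS.index(expected.lower())  # raises ValueError if unknown
    return LEVELS[min(index + 1, len(LEVELS) - 1)]


print(level_to_measure("learning"))     # application
print(level_to_measure("application"))  # results
print(level_to_measure("results"))      # roi
```

You don’t have to report the extra level, but you’ll have it if anyone asks.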

The Attribution Minefield

I’ve written about this in detail elsewhere, but if we’re talking about measuring and reporting impact, it’s worth a brief mention. Anytime there is a hint that I’ll be measuring and reporting on pre-/post-initiative results to prove impact, I start with all my charter stakeholders and cross-functional collaborators who are involved with the initiative or who may be influencing sales force performance during the measurement period, and get aligned on how things will be measured, reported, and attributed.

I often say, jokingly, that:

All impact reporting is a lie. The goal is to get together and agree on the lies we’ll all believe.

– Mike Kunkle

Okay, that’s hyperbole, to make a point. But the point is valid: you need to gain agreement upfront on how you will track, measure, analyze, report, and attribute results. And you need to include the leaders you’ll be reporting to, and take their feedback upfront, rather than have your analysis chewed up and spit out as hogwash later. Trust me on that. I learned the hard way, very early in my career. It’s a valuable lesson, so do yourself a favor and learn from the mistakes of others, rather than going through it yourself. It’s hard to do it upfront, but a lot less hard than being crushed afterward.

Closing Thoughts

As we wrap up this post, let’s reflect on the essence of making an impact with enablement. Remember, “impact” isn’t a one-size-fits-all concept—it’s a sliding scale that varies based on context and expectations.

It’s crucial to align with executive expectations and strategically apply your efforts and measurement strategies. Not every initiative demands the highest level of impact. Tailor your approach to fit the specific project and its importance, ensuring sustainable success without overwhelming your resources.

Whether you’re tackling a major sales methodology implementation or a quarterly product update, gauge the right level of effort and impact. Clear communication and alignment with stakeholders are key. Set expectations upfront and agree on how to measure and report results to avoid potential pitfalls and ensure your efforts are recognized. For your own safety, and perhaps your sanity, consider going one step further in your analysis than what is expected. It can’t hurt, and it can provide valuable insights and learnings.

Ultimately, our goal is to drive positive outcomes for our organization. By being adaptable, strategic, and proactive, we can continue to make a significant impact and support the success of our sales force.


You can read Mike’s original post here.
